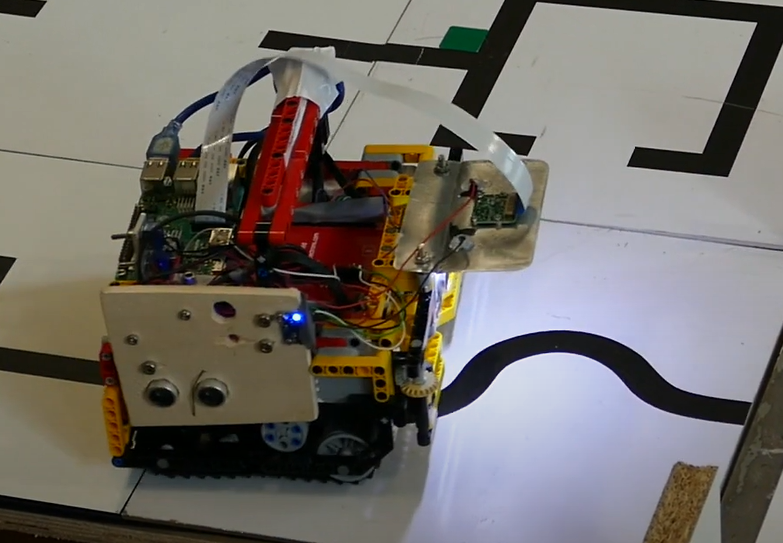

In the previous season, we had problems with a conventional light sensor array, primarily because it was not properly calibrated, which led to inconsistent measurements during competitions. For 2020, we therefore switched to a camera-based approach for line following. A Raspberry Pi read the camera, evaluated each frame, and transmitted the result to an Arduino UNO via USB. The UNO processed this data to determine the motor speeds and drove the LEGO motors via an EvShield. Because we were short on time, we could not implement a proper search algorithm for locating victims. Instead, the robot navigated the evacuation zone in a randomized manner and used the encoders in the LEGO motors to detect the presence of victims within its arm.
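To make the line-following pipeline more concrete, here is a minimal sketch of how a frame could be turned into motor speeds. It is not our original code: the function names `line_offset` and `motor_speeds`, the brightness threshold, and the proportional steering rule with its `base` and `gain` values are all assumptions chosen for illustration. On the real robot the frame evaluation ran on the Raspberry Pi and the speed calculation on the UNO; both steps are shown in Python here only for brevity.

```python
def line_offset(row, threshold=100):
    """Estimate where the dark line sits in one grayscale image row.

    Returns a value in [-1, 1] (negative = line left of centre),
    or None if no pixel is darker than the (assumed) threshold.
    """
    dark = [x for x, value in enumerate(row) if value < threshold]
    if not dark:
        return None
    centre = sum(dark) / len(dark)       # mean column of the dark pixels
    half_width = (len(row) - 1) / 2      # column index of the image centre
    return (centre - half_width) / half_width


def motor_speeds(offset, base=60, gain=40, limit=100):
    """Hypothetical proportional steering rule.

    A positive offset (line to the right) speeds up the left motor and
    slows the right one, turning the robot towards the line.
    Returns (left, right), each clamped to [-limit, limit].
    """
    if offset is None:
        return 0, 0                      # no line visible: stop

    def clamp(value):
        return max(-limit, min(limit, value))

    return clamp(base + gain * offset), clamp(base - gain * offset)


if __name__ == "__main__":
    # One synthetic 8-pixel row: bright floor with a dark line on the right.
    row = [255, 255, 255, 255, 255, 255, 0, 0]
    print(motor_speeds(line_offset(row)))
```

In a setup like this, only the small result (for example the offset) would need to cross the USB link for each frame, which keeps the serial traffic low.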